



Elena Freeman designs partnerships and events at Favikon. She cares about building spaces where creators, brands, and ideas meet in ways that feel real and memorable. From partner programs to community gatherings, she focuses on making connections that spark collaboration and professional growth.


Peter Slattery: The academic voice shaping AI risk in 2026

Peter Slattery has become one of the clearest voices in AI risk and safety. His content blends research, policy thinking, and real-world frameworks that people can use. He speaks with calm authority and never loses the human side of the conversation. This makes his work stand out in a field that often feels abstract.

1. Who he is

Peter is an AI Risk Management Leader at MIT FutureTech. His work focuses on AI governance, long-term risk, and technical safety. He shares structured research, frameworks, and reports that help institutions understand real AI threats. His posts often come from his own publications or collaborations with leading researchers. With roots in academia and a strong policy foundation, he positions himself as a bridge between research communities and decision-makers. His credibility comes from deep knowledge and consistent clarity.

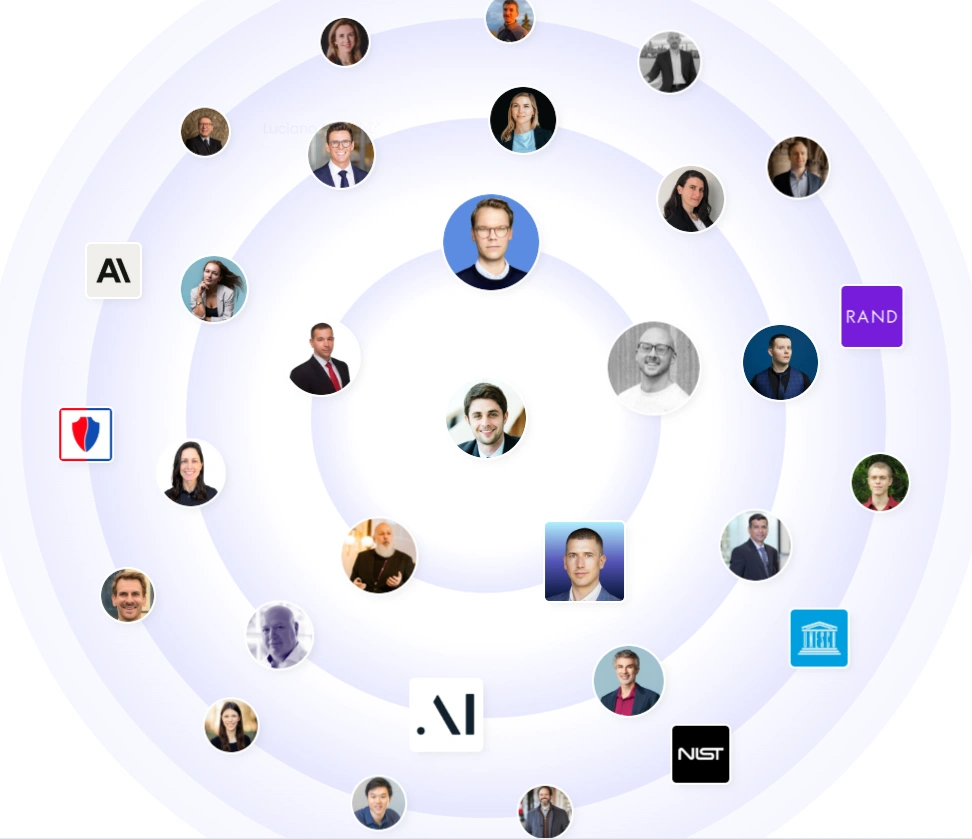

2. A network of heavyweights

Peter interacts with top researchers, AI governance teams, international policy institutions, and key thought leaders in advanced AI. His network spans MIT, RAND, NIST, and global safety communities. He also sits close to other creators shaping the future of AI education and safety. This network boosts his visibility and strengthens the impact of every post he shares.

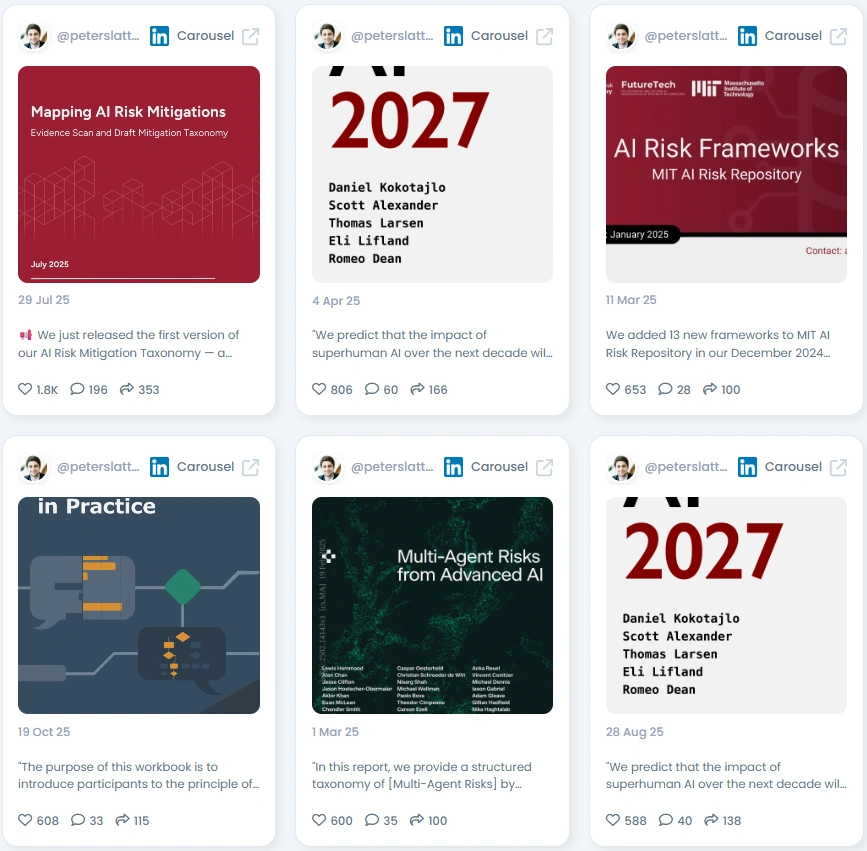

3. Why people listen

Peter writes with a calm and structured tone, avoiding hype and emphasizing what is measurable and useful. His reports are clear, and his explanations are practical, making complex topics easier to understand. This approach builds trust, especially on high-stakes subjects like model evaluation or multi-agent risk. People engage with his content because it answers real questions with real data.

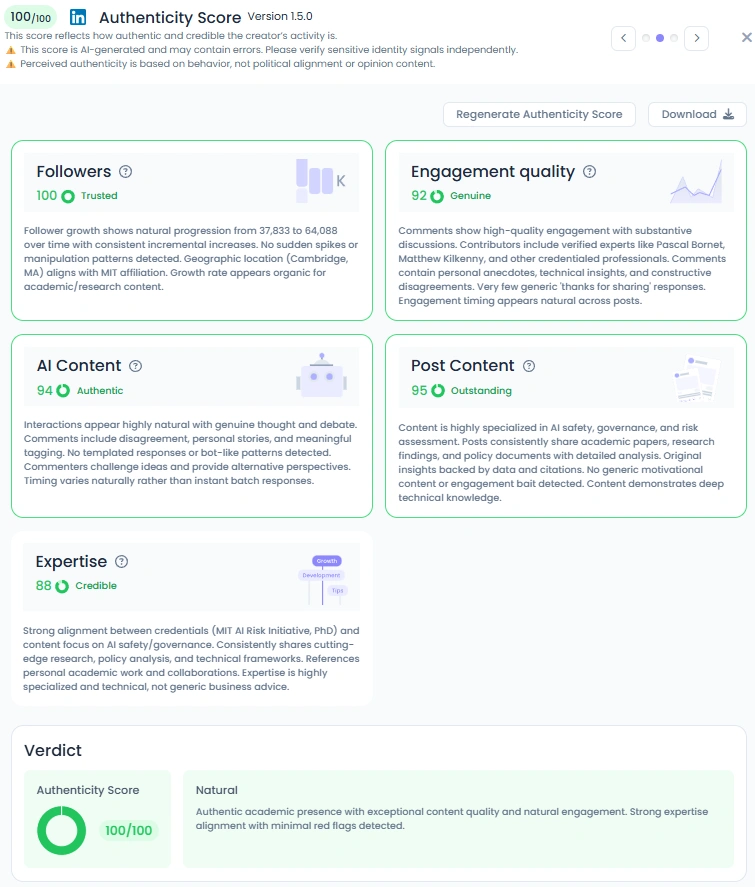

4. Authenticity that resonates

Peter holds a perfect Favikon Authenticity Score of 100 out of 100. His audience is made up of researchers, engineers, and policy experts who comment with thoughtful insights. He shares original reports and frameworks, not flashy motivational content. His engagement shows real debate and real curiosity. Everything feels natural and aligned with his academic background.

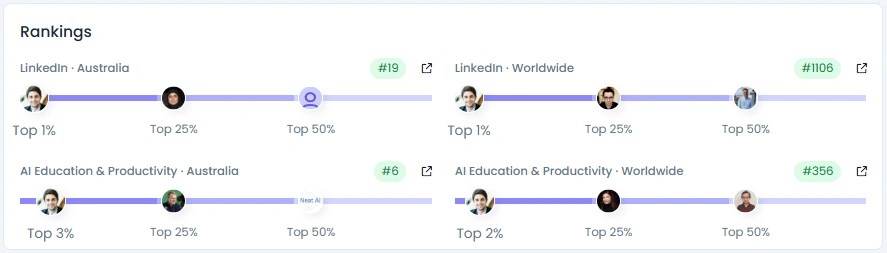

5. Numbers that back it up

Peter has grown steadily from 37,833 to over 64,000 followers with no artificial patterns. His engagement quality is strong, often filled with technical comments and expert discussions. His Influence Score sits at 7,632 points, placing him in the top 1 percent on LinkedIn in Australia. He posts detailed reports and carousels that keep his audience informed and involved.

6. Collaborations that matter

Peter shares ongoing work at MIT FutureTech and connects with researchers at leading global institutions. His publications include AI risk frameworks, mitigation taxonomies, and multi-agent system analyses. These collaborations amplify his work and give his content a long academic tail. He is part of projects that shape how governments and labs think about safety.

7. Why brands should partner with Peter Slattery

Working with Peter makes sense for brands that value expertise and credible thought leadership. He is a strong fit for institutions working in AI safety, governance, or risk.

- Thought leadership campaigns about safe AI development

- Speaking engagements or roundtable discussions

- Educational reports or co-written frameworks

- Policy-aligned content for companies launching responsible AI products

8. What causes he defends

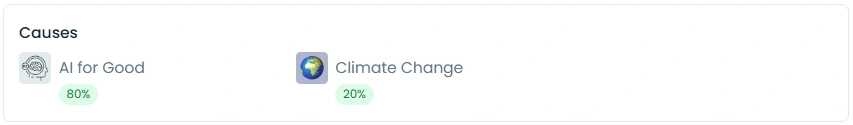

Peter is a strong advocate for AI for Good and actively contributes to discussions aligned with climate and social impact. His posts emphasize how developing AI safely can deliver long-term benefits for society. He regularly shares research that informs responsible governance and ensures public interest remains central. Through his work, he demonstrates a clear commitment to ethical progress and sustainable innovation.

9. Why Peter Slattery is relevant in 2026

AI safety has moved from being a niche concern to a global priority. Peter is at the center of this movement, offering clear thinking and consistent research output. As companies and governments face growing uncertainty around AI risks, his frameworks provide the guidance they need to act quickly and confidently. His influence continues to grow as AI systems become increasingly capable and complex.

Conclusion: A steady voice in a fast world

Peter Slattery brings depth and calm to a field evolving at high speed. He proves that credible research can be accessible and engaging. His work supports the people building the future and the people regulating it. In 2026, his voice remains one of the most trusted in AI risk and governance.
